INTERSPEECH 2020

Multimodal Target Speech Separation with Voice and Face References

Leyuan Qu✝, Cornelius Weber, Stefan Wermter

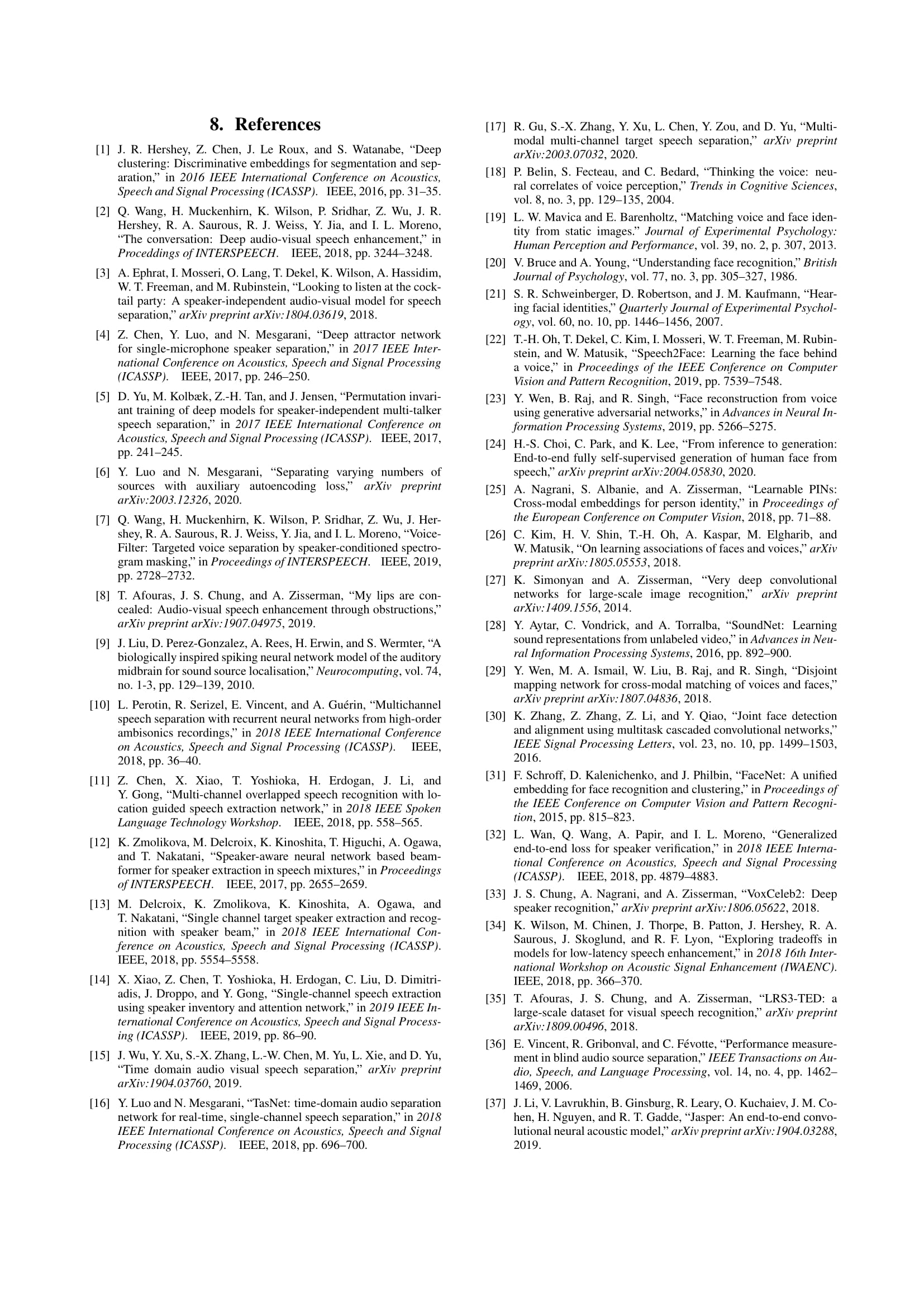

Knowledge Technology Group (WTM), Department of Informatics, University of Hamburg

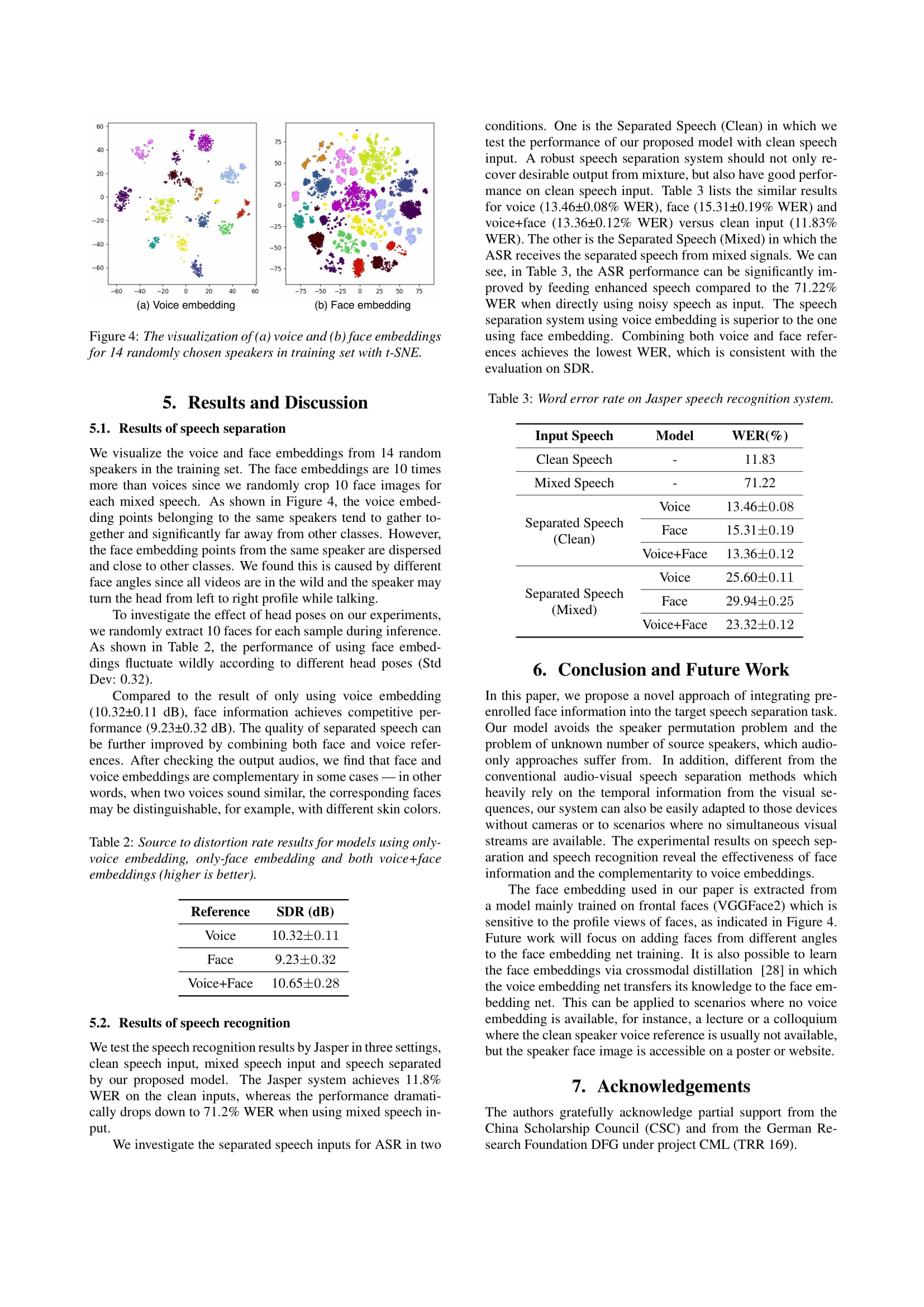


Abstract

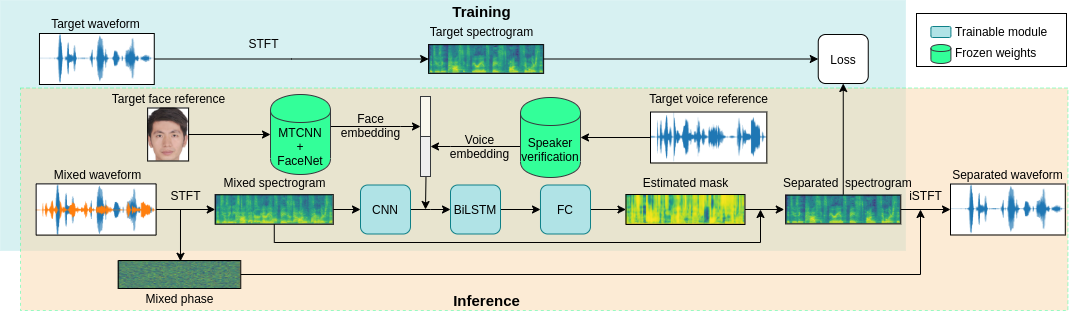

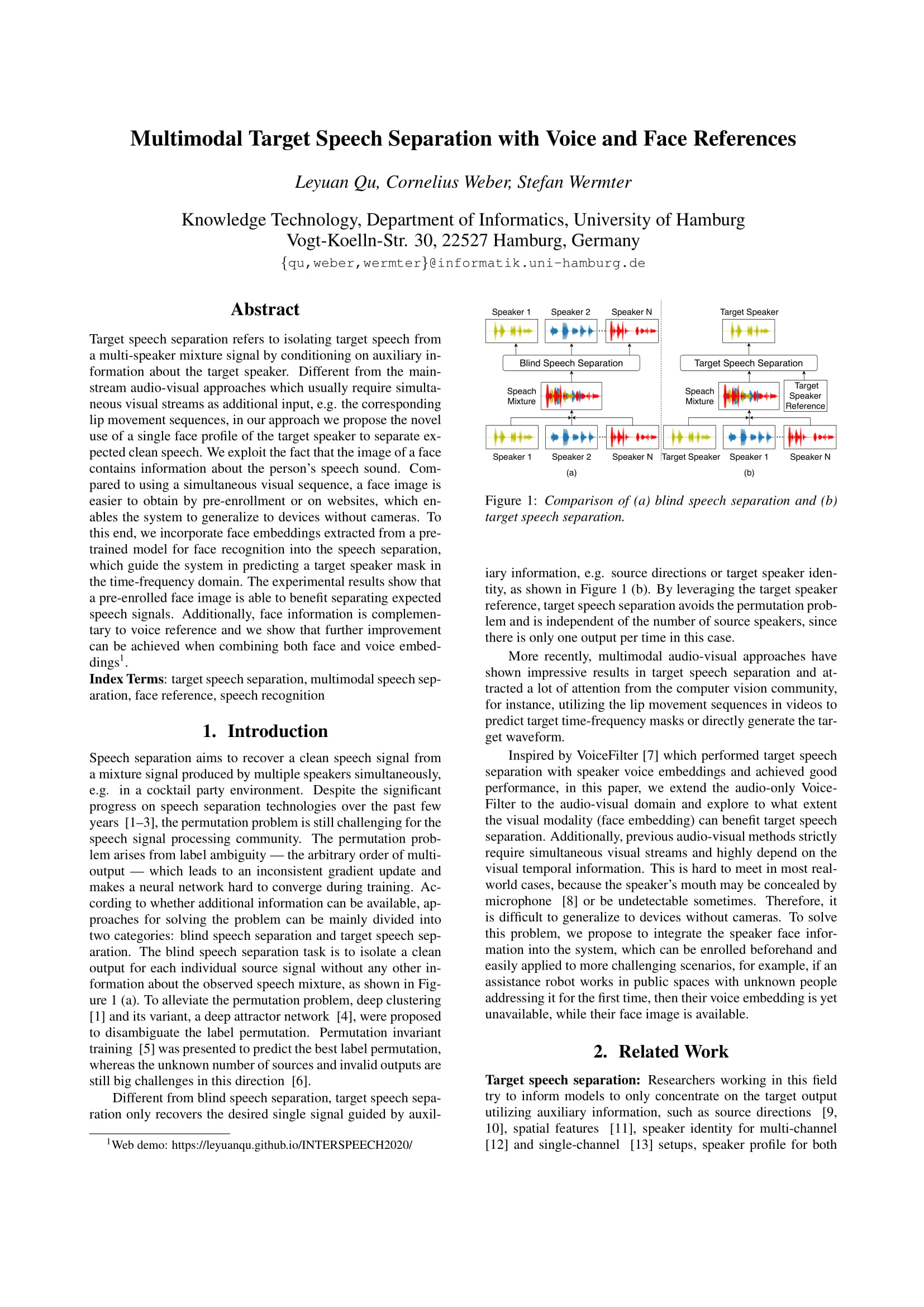

Target speech separation refers to isolating the target speech from a multi-speaker mixture signal by conditioning on auxiliary information about the target speaker. Unlike mainstream audio-visual approaches, which usually require a simultaneous visual stream as additional input (e.g., the corresponding lip-movement sequence), in this work we propose the novel use of a single face profile of the target speaker to separate the expected clean speech. We exploit the fact that the image of a face contains information about the person's speech sound. Compared to a simultaneous visual sequence, a face image is easier to obtain, either through pre-enrollment or from websites, which enables the system to generalize to devices without cameras. To this end, we incorporate face embeddings extracted from a pretrained face recognition model into the speech separation system, where they guide the prediction of a target-speaker mask in the time-frequency domain. The experimental results show that a pre-enrolled face image can benefit the separation of the expected speech signal. Additionally, face information is complementary to the voice reference, and further improvement can be achieved by combining face and voice embeddings.
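To make the data flow described above more concrete, here is a minimal sketch of what happens around the separation network: fusing the reference embeddings, attaching them to every time frame of the mixture, and applying a time-frequency mask to the mixture magnitude. It rests on several assumptions not stated on this page. The fusion rule (L2-normalize each embedding, then concatenate), the frame-wise tiling, and the oracle ratio mask that stands in for the trained network's predicted mask are illustrative choices, not necessarily the method used in the paper. All function names are hypothetical, and the toy vectors replace real voice and face embeddings.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # rows = time frames, columns = frequency bins


def l2_normalize(v: Vector) -> Vector:
    """Scale a vector to unit Euclidean norm (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def fuse_embeddings(voice: Vector, face: Vector) -> Vector:
    """Hypothetical fusion: normalize each reference embedding, then concatenate."""
    return l2_normalize(voice) + l2_normalize(face)


def condition_frames(mixture_feats: Matrix, speaker_emb: Vector) -> Matrix:
    """Append the same speaker embedding to every time frame (new lists, no mutation)."""
    return [frame + speaker_emb for frame in mixture_feats]


def ratio_mask(target_mag: Matrix, mixture_mag: Matrix) -> Matrix:
    """Oracle ratio mask |S| / |Y| clipped to [0, 1]; a stand-in for the network output."""
    return [[min(t / m, 1.0) if m > 0 else 0.0 for t, m in zip(t_row, m_row)]
            for t_row, m_row in zip(target_mag, mixture_mag)]


def apply_mask(mixture_mag: Matrix, mask: Matrix) -> Matrix:
    """Multiply the mixture magnitude element-wise by a mask clipped to [0, 1]."""
    return [[m * min(max(w, 0.0), 1.0) for m, w in zip(mag_row, mask_row)]
            for mag_row, mask_row in zip(mixture_mag, mask)]


if __name__ == "__main__":
    emb = fuse_embeddings([3.0, 4.0], [0.0, 2.0])  # toy voice and face embeddings
    mixture = [[1.0, 2.0], [4.0, 0.5]]             # toy mixture magnitudes (2 frames x 2 bins)
    target = [[0.5, 2.0], [1.0, 0.0]]              # toy target-speaker magnitudes
    conditioned = condition_frames(mixture, emb)   # what a mask estimator would receive
    estimate = apply_mask(mixture, ratio_mask(target, mixture))
    print(emb, conditioned, estimate)
```

In a real system the mask would come from a network that takes the conditioned frames as input; the oracle mask is used here only so that the example runs end to end and shows that applying a well-estimated mask to the mixture recovers the target magnitudes.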

Architecture

Demos

Four demo examples are provided. Each example gives the source video, the mixed wav, and the target wav, along with the separated wav obtained with each type of reference embedding:

| Video | Mixed wav | Target wav | Reference embeddings | Separated wav |
|---|---|---|---|---|
| | | | Voice | |
| | | | Face | |
| | | | Voice + Face | |

More Samples

| Mixed wav | Target wav | Reference | Separated wav |
|---|---|---|---|
| | | Voice + Face | |
| | | Voice + Face | |
| | | Voice + Face | |
| | | Voice + Face | |
| | | Voice + Face | |
| | | Voice + Face | |
| | | Voice + Face | |

BibTeX

@inproceedings{qu2020multimodal,
  author = {Leyuan Qu and Cornelius Weber and Stefan Wermter},
  title = {Multimodal Target Speech Separation with Voice and Face References},
  booktitle = {{INTERSPEECH}},
  year = {2020}
}

Paper Link

Acknowledgment

The authors gratefully acknowledge the partial support from the China Scholarship Council (CSC), the German Research Foundation DFG under project CML (TRR 169), and the European Union under project SECURE (No 642667).